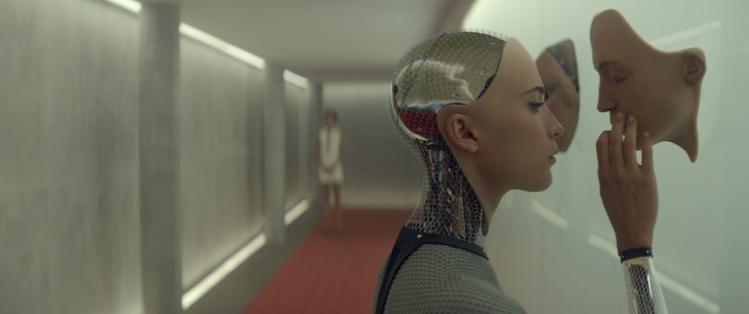

Do you say “thank you” to Siri or Alexa? I have friends who do. They’re a bit sheepish about it, since they know the voices are just electronic systems set up to simulate human interactions. But if you watch the movie Her you may start to wonder whether a much more sophisticated operating system just might be a person: even a person you could love. A recent Ian McEwan short story (“Düssel...” in the July 18 issue of the New York Review of Books) makes plausible fiction of a human-robot love affair. And, of course, a bevy of films, from Star Wars to Ex Machina, and TV shows like Westworld, imagine worlds in which it’s at least tempting to think of robots as people.

Let’s think about a case less dramatic than movie scenarios but much closer to what might actually happen fairly soon. I bring home my new Apple iPal (as the ads said, “Everybody needs a pal!”): a humanoid robot designed to provide companionship to lonely people. The robot has a pleasant and responsive face, moves easily without mechanical jerks, converses fluently about standard topics, has a surprising sense of humor, and offers informed and sympathetic advice about my job and personal life. For a while, I may think of it as just an enhanced Siri, but after prolonged contact—and maybe a few upgrades in the self-learning software—my iPal relates memories of our time together, expresses joy or sorrow about things that happen to us, and sometimes talks sincerely about how much our relationship means. I don’t forget that I’m interacting with a robot—a machine, not a human being—but I find myself thinking that this is someone who does care about me, and about whom I have come to care—not just a pal, but a friend. If we really are friends, how could my friend, even though a machine, not be a person?

But here we need to be careful. An iPal is a computer. Can computers actually think? Well, they are designed to perform functions that humans perform through thinking. They expertly process information, present it at appropriate points in a conversation, and use it to draw reasonable conclusions. But thinking in this sense can just be a kind of high-level functioning. It’s another question whether iPals’ calculations are accompanied by a subjective awareness of what they’re doing. Maybe, like math calculators, they generate output without being literally aware of doing the calculations or of what the calculations mean.

But even if we allow that my iPal is somehow aware of the intellectual functions it performs, there’s the much more important question of whether it has the sensory and emotional experiences human beings have. Here once again we need to distinguish between functioning and awareness. We may say that an electric “eye” that opens a door when someone approaches “sees” that person. But we don’t think there’s any seeing going on in the literal sense of an internal, subjective awareness. The “eye” just performs the reactive function that we could, in a quite different way, perform with our capacity for visual awareness.

What I need, then, is to find out if my iPal is actually aware: whether it has the internal, subjective experiences that go on when we are thinking, perceiving with our five senses, or feeling emotions or pain. Here my question converges with a famous philosophical conundrum, the problem of other minds.

This is the problem of how we can know whether other people do in fact have minds or, to put it another way, whether other people have the internal subjective experiences we do. I say “experiences we have,” but the question is really how I know that anyone besides me has inner experiences. Once the question is raised, it suggests the dizzying prospect of solipsism: the philosophical view (or pathological delusion) that I alone exist as a conscious being. Note also that the question is not whether others might have experiences different from mine (say, seeing as green what I see as red) but whether others might have no subjective experiences at all: whether the mental lights might be entirely out.

Why would such a bizarre problem occur to me? Because I have direct introspective access to my own inner experiences, but not to those of anyone else. I can just “see” (though not with my eyes) that I am thinking it’s going to rain, looking at an interesting face, or feeling a pain in my knee. Introspection can, of course, be unreliable: I may be feeling envy when I think of my friend’s new Rolex, but believe that I’m just puzzled at his consumerist values. Yet even if I’m sometimes wrong about the nature of my inner experiences, I can at least be sure that I’m having them. I have no such direct knowledge that other people have an inner life.

Raising doubts about whether other people have an inner mental life is a prime example of the skeptical philosophical arguments that David Hume (who himself devised some of the best) ironically defined as arguments that are unanswerable but also utterly ineffective. We see their logical force but continue to believe what they say we should doubt. Doubting that other people have experiences is something we just can’t live with.

But as I get to know my iPal, the problem of other minds becomes highly relevant. Here is a case in which I might well decide there is just no mind there. But how to make the decision?

We might think that a decision isn’t needed. Why should it matter whether my iPal has subjective experience? But it matters enormously—first because causing others unnecessary pain is a major way (if not the only way) of acting immorally. If iPals can suffer, then we have a whole set of moral obligations that we don’t have if they can’t suffer.

But it also—and especially—matters for the question of whether a robot could really be my friend. Friendship has various aspects, some of which might apply to my relation to a robot. The robot might, for example, be pleasant to be with or help me carry out projects. But one essential aspect of friendship is emotional. Friends feel with and for one another; they share one another’s joys and sufferings. In this key respect, a robot without subjectively experienced feeling could not be my friend. How could I know that my iPal actually has subjective experiences?

In the case of other humans, the classic “argument for other minds,” as J. S. Mill formulated it, is based on an analogy. I know that my inner subjective states are often expressed by my body’s observable behavior. Sadness leads to crying, pain to grimacing; speech expresses thoughts. Since I observe others crying, grimacing, and speaking, it’s at least probable that their bodily behaviors likewise express subjective states. An apparently decisive objection to this reasoning is that the analogy is based on only one instance. What reason do I have to think that I’m a typical example of a human being? For all I know, I’m the rare exception in which subjective states cause bodily behavior, while in everyone else brain states alone cause bodily behavior.

But there may be a way to retool this argument from analogy. Besides knowing that my mental states can cause my behavior, I also know that my brain states cause my mental states. Too much wine and I feel woozy, too much caffeine and I feel hyperalert. I know quite a lot about these connections from my own experience, and there’s every reason to think that exhaustive neuroscientific study of my brain would reveal that virtually all my mental life is produced by neural processes. But if it’s a scientific fact that my neural processes produce subjective experiences, why think that these neural processes don’t work the same way in other people’s brains? There’s no evidence that there’s a special set of neurological laws for my brain.

But even if this argument makes a good case for other humans having subjective experiences, it doesn’t help with my iPal, whose observable behavior is produced by a physical structure very different from the human brain. We don’t have evidence that this other physical structure causes subjective experiences, so there’s no reason to think that my iPal has such experiences. But without subjective experiences my iPal cannot be a person and so cannot be my friend.

We’re left, then, with a new version of the problem of other minds. Is there any way to tell whether a robot (something not governed by the laws of human neuroscience) has inner subjective experiences? Unlike the traditional problem, this may soon be a matter of pressing practical importance.